Introduction

Throughout this article, we let G be a subgroup of generated by a subset

We wish to consider the following questions.

- Given A, how do we compute the order of G?

- How do we determine if an element

lies in G?

- Assuming

, how do we represent g as a product of elements of A and their inverses?

In general, the order of G is comparable to even for moderately-sized A so brute force is not a good solution.

We will answer the first two questions in this article. The third is trickier, but there is a nice algorithm by Minkwitz which works for most practical instances.

Application

We can represent the group of transformations of the Rubik’s cube as a subgroup of generated by a set S of 6 permutations. The idea is to label each non-central unit square of each face by a number; a transformation of the Rubik’s cube then corresponds to a permutation of these 6 × 8 = 48 unit squares.

[Image from official Rubik’s cube website.]

Our link above gives the exact order of the Rubik’s cube group (43252003274489856000), as computed by the open source algebra package GAP 4. How does it do that, without enumerating all the elements of the group? The answer will be given below.

Schreier-Sims Algorithm

To describe the Schreier-Sims algorithm, we use the following notations:

is some subset represented in the computer’s memory;

is a subgroup of

;

- G acts on the set

Let us pick some random element and consider its orbit

under G. From the theory of group actions, we have

where is the isotropy group of k. Now it is easy to compute the orbit of k: we start by setting the orbit to be the singleton {k}, then expand it by letting elements of A act on elements of this set. The process stops if we can’t add any more elements via this iteration. A more detailed algorithm will be provided later.

Thus, if we could effectively obtain a set of generators for , our task would be complete since we could recursively apply the process to

. [Or so it seems: there’ll be a slight complication.]

For that, we pick a set U of representatives for the left cosets as follows. For each

, we pick some element

which maps

, and for j = k we pick the identity. To facilitate this process, we use a data structure called a Schreier vector.

Schreier Vector for Computing Orbits

Warning: our description of the Schreier vector differs slightly from the usual implementation, since we admit the inverse of a generator.

Let us label the elements of the generating set

- Initialize an array (v[1], v[2], …, v[n]) of integers to -1.

- Set v[k] := 0.

- For each i = 1, 2, …, n:

- If v[i] = -1 we ignore the next step.

- For each r = 1, 2, …, m, we set g = gr:

- set j := g(i); if v[j] = -1, then set v[j] = 2r-1;

- set j := g-1(i): if v[j] = -1, then set v[j] = 2r.

- Repeat the previous step until no more changes were made to v.

The idea is that v contains a “pointer” to an element of A (or its inverse) which brings it one step closer to k.

Example

Suppose we have elements

Labelling and

, we obtain the following Schreier vector for k=1:

Thus the orbit of 1 is {1, 2, 3, 4, 5, 6, 7, 9}. To compute an element which maps 1 to, say, 9, the vector gives us Hence we can pick the following for U

Key Lemma

Next, we define the map which takes

to the unique

such that

(i.e. u is the unique element of U satisfying u(k) = g(k)). Our main result is:

Schreier’s Lemma.

The subgroup

is generated by the following set

Proof

First note that takes k to itself: indeed

by definition takes k to

Thus we see that each

as desired so

.

Next, observe that B is precisely the set of all for

and

which lies in

Indeed for such an element, u’ must be the unique element of U which maps k to

and so

Now suppose ; we write it as a product of elements of A and their inverses:

We will write

where are elements to be recursively chosen. Specifically we start with

, and for each

we set

. Note that each term in parentheses is an element of

Thus, the expression lies in

So we have . Since

, this gives

as well so we have obtained h as a product of elements of B and their inverses. ♦

Example

Consider the subgroup generated by

If we pick k = 1, its orbit is For the coset representatives U, we take:

Now the subgroup is generated by the following 6 elements:

Let be the subgroup generated by these 6 elements; after removing the identity elements we are left with 4. Now if we pick k = 2 next, we obtain 5 representatives for the next U and thus, we obtain up to 20 generators for the stabilizer of {1, 2} in G.

Problem

The number of generators for the stabilizer groups seems to be ballooning: we started off with 2 examples, then expanded to 6 (but trimmed down to 4), then blew up to 20 after the second iteration.

Indeed, if we naively pick over all

, then the number of generator increases

times, while the order of the group decreases

times as well. Thus, at worst, the number of generators is comparable to the order of the group, which is unmanageable.

Thankfully, we have a way to pare down the number of generators.

Sims Filter

Sims Filter achieves the following.

Task.

Given a set

, there is an effective algorithm to replace

by some

satisfying

and

Let us explain the filter now. For any non-identity permutation , let

be the pair (i, j) with

such that

for all

and

Now we will construct a set B such that and the elements

all have distinct

It thus follows that

- Label

- Prepare a table indexed by (i, j) for all

. Initially this table is empty.

- For each

, if

we drop it.

- Otherwise, consider

. If the table entry at (i, j) is empty, we fill

in. Otherwise, if the entry is

, we replace

with

and repeat step 3.

- Note that this new group element takes i to i so if it is non-identity, we have

with

- Note that this new group element takes i to i so if it is non-identity, we have

After the whole process, the entries in the table give us the new set B. Clearly, we have

Example

As above, let us take A = {a, b} with

First step: since J(a) = (1, 5) we fill the element a in the table at (1, 5).

Second step: we also have J(b) = (1, 5), so now we have to replace b with

Now we have J(b’) = (2, 6) so this is allowed.

Summary and Applications

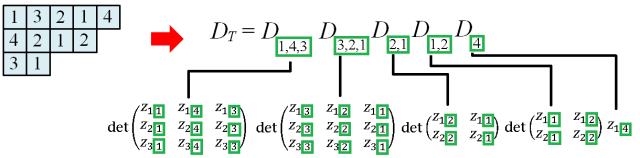

In summary, we denote and

- For i = 1, 2, …

- Pick a point

not picked earlier.

- Let

be the stabilizer group for

under the action of

From

, we use Schreier’s lemma to obtain a generating set for

- Use Sims filter to reduce this set to obtain

of at most

elements.

- If

is empty, quit.

- Pick a point

Thus are distinct such that each of the groups

has an explicit set of generators of at most elements. Here are some applications of having this data.

1. Computing |G|.

It suffices to compute for each i. Since

is the stabilizer group for the action of

on

we need to compute the size of the orbit

for each i. Since we have a generating set for each

, this is easy.

2. Determining if a given  lies in G.

lies in G.

To check if we first check whether

lies in the orbit

If it weren’t,

Otherwise we pick some

such that

Replacing g with

, we may thus assume that

, and it follows that

if and only if

Thus we can solve the problem inductively.

Generalizing, we can determine if is a subgroup of

, when H and G are given by explicit sets of generators.

3. Checking if  is a normal subgroup of

is a normal subgroup of

Writing and

, we claim that H is normal in G if and only if:

First we fix Since

and

, we see that

But both groups are of the same finite cardinality so equality holds. Thus

as well. It follows that

for all