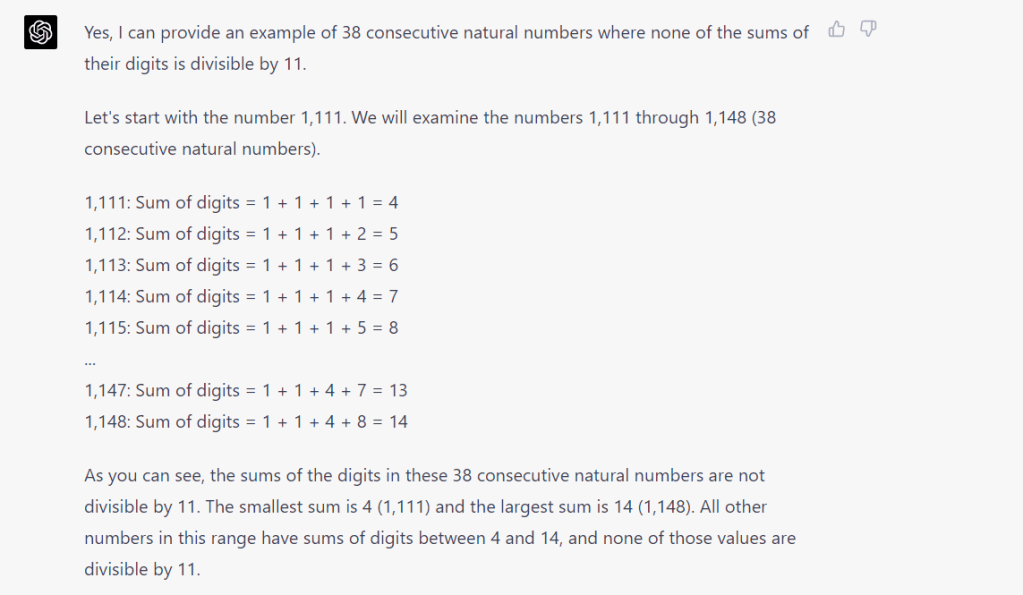

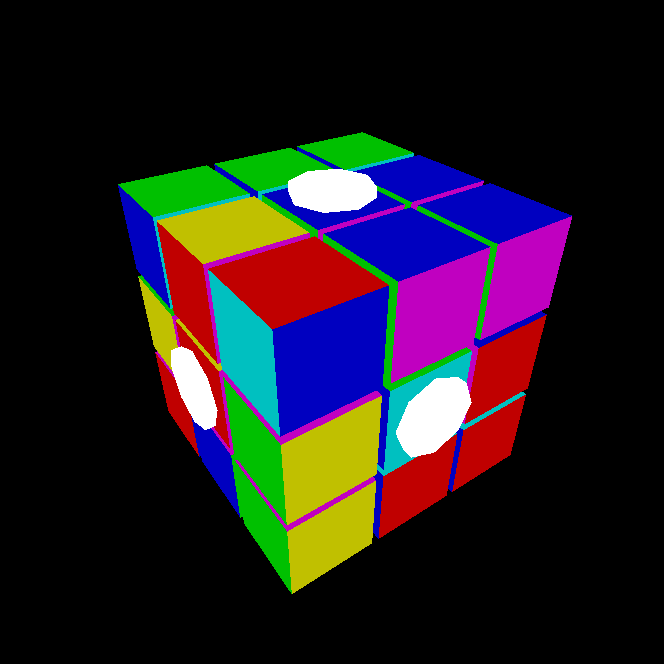

This post is only tangentially related to mathematics. As an experiment in AI-assisted learning, I tried to write a web application in JavaScript + WebGL. At the start of this experiment, I had some experience with JavaScript but absolutely no knowledge of WebGL. My objective was to program a simple interface for manipulating a Rubik’s cube.

Within a few hours, I ended up with this:

https://lzw75.github.io/webgl/rubik_webgl.html

Instructions:

- Use the 4 arrow keys to rotate the entire cube.

- Hover your mouse cursor over the white “buttons” and use the mouse scroll wheel to rotate the faces.

The following is a summary of how I picked up some basics of WebGL by repeatedly querying ChatGPT.

First I needed to know how to draw a cube, so I asked:

Help me write an HTML file using WebGL to display a cube. Allow the reader to vary the viewing angles.

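ChatGPT replied with a piece of code to do the above, built on the three.js library (the THREE.* objects that appear below) rather than on raw WebGL calls. The resulting code let the user change the orientation of the cube via the 4 arrow keys.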

Interestingly, the code also rotated the cube continuously:

    var render = function () {
        requestAnimationFrame(render);
        cube.rotation.x += 0.1;
        cube.rotation.y += 0.1;
        renderer.render(scene, camera);
    };
    render();

I did not ask for this functionality but it turned out to be useful later.
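The reason a loop like this becomes useful is that the same per-frame callback can drive a finite animation, such as a quarter turn of one face. Here is a minimal sketch of that idea. The names `makeTurn` and `stepsPerTurn` are hypothetical and not taken from the original application, and the frames are simulated with a plain loop so that the snippet runs without a browser; in the real page, `step()` would be called from inside `render()`.

```javascript
// Hypothetical helper: advances an angle toward a target by a fixed
// fraction each frame and reports when the turn has finished.
function makeTurn(targetAngle, stepsPerTurn) {
  let stepsDone = 0;
  return {
    angle: 0,
    done: false,
    step() {
      if (this.done) return; // ignore extra frames after the turn ends
      stepsDone += 1;
      // Recompute from the step count so rounding errors do not accumulate.
      this.angle = (targetAngle * stepsDone) / stepsPerTurn;
      this.done = stepsDone >= stepsPerTurn;
    },
  };
}

// Simulate the frames that requestAnimationFrame would otherwise drive.
const turn = makeTurn(Math.PI / 2, 10);
let frames = 0;
while (!turn.done) {
  turn.step();
  frames += 1;
}
console.log(frames, turn.angle);
```

Deriving the angle from the step count, rather than adding a small increment each frame, is a design choice that keeps the cube from drifting slightly off the grid after many turns.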

My first idea was to have a single cube, with each face partitioned into 3 x 3 squares. But I wasn’t sure how to rotate each face with this geometry. Instead, I decided to have 27 cube objects. Next, I needed to have a different colour for each face. So I asked:

I want a cube with all six sides of different colors.

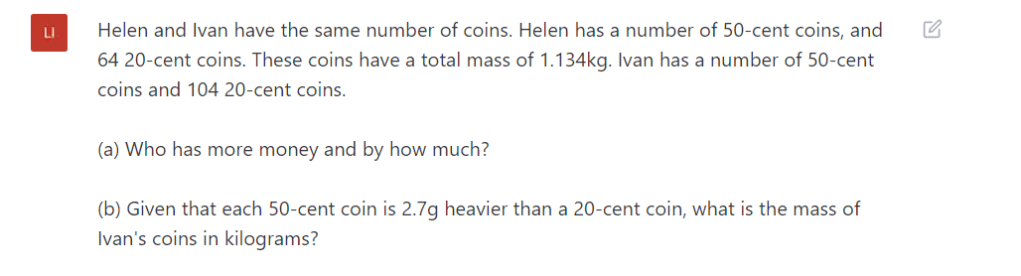

ChatGPT replied with the appropriate code, but the scene turned out black. So I responded:

I tried it but the cube isn’t appearing.

Happily, ChatGPT managed to point out the problem: it listed four possible causes, and I quickly identified the third one (lack of a light source) as the right one. ChatGPT even amended its original code to add a new light source, and lo and behold, it now worked!

Next I needed to add 27 cubes, so I asked:

How do I add 3 cubes of the same size in different parts of space?

With the reply, I could create the 27 cubes required to form a Rubik’s cube. But I recalled that in many applications, one can form groups of objects for easier manipulation. So:

In WebGL, can I form a composite object comprising of three cubes?

ChatGPT suggested creating multiple THREE.Mesh objects, then putting them together in a THREE.Group object. That was exactly what I wanted.
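The layout of the 27 small cubes can be sketched as plain data. The snippet below only computes their centres on a 3 × 3 × 3 grid around the origin. The function name `cubiePositions` is hypothetical, and the unit spacing is an assumption (the check `position.x < -0.99` further down suggests it, but the original code is not shown).

```javascript
// Centres of the 27 small cubes on a 3 x 3 x 3 grid centred at the origin.
// ASSUMPTION: neighbouring cubes are `spacing` units apart (default 1).
function cubiePositions(spacing = 1) {
  const coords = [-1, 0, 1];
  const positions = [];
  for (const x of coords) {
    for (const y of coords) {
      for (const z of coords) {
        positions.push({ x: x * spacing, y: y * spacing, z: z * spacing });
      }
    }
  }
  return positions;
}

const positions = cubiePositions();
// The nine cubes of one face share the same extreme coordinate.
const leftFace = positions.filter((p) => p.x < -0.99);
console.log(positions.length, leftFace.length);
```

In three.js, each entry would become the position of one THREE.Mesh (for example via `mesh.position.set(x, y, z)`), and all the meshes would be added to a single THREE.Group.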

Now to rotate a face of the cube, I needed to first identify which cubes to rotate. In each case, 9 cubes were chosen out of 27 for rotation. For that I needed the following.

In WebGL how do I access sub objects in a group object?

But ChatGPT didn’t give me what I wanted, because I had for some reason started a new conversation. The reply was ambiguous and unhelpful. So I tried again:

I have a WebGL object named “cubes” of from “THREE.Group()”. How do I access the third element in the list?

Note the grammatical mistake in the first sentence (that was completely unintentional!). Thankfully, ChatGPT correctly interpreted my intentions and gave me the following sample code (together with a rather long explanation and other variants):

let cube = cubes.children[2];

Exactly what I needed. My next thought was to put the 9 cubes in a separate group, then rotate the group. So I asked:

In WebGL, can a single cube belong to two “THREE.Group” objects simultaneously?

ChatGPT replied in the affirmative, so I set about editing my code, adding the 9 cubes to a separate group for rotation. But then I ran into a problem:

In the following WebGL code:

    function move1(all_cubes) {
        var cube_list = all_cubes.children;
        var tmp_group = new THREE.Group();
        for(var i = 0; i < cube_list.length; i++) {
            console.log(cube_list[i].position);
            if (cube_list[i].position.x < -0.99)
                tmp_group.add(cube_list[i]);
        }
    }

The object “cube_list” had some of its objects removed, when I added them to the object “tmp_group”. Why?

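ChatGPT explained that the objects were being removed from the all_cubes group because they were being added to tmp_group: an object in the scene graph has only one parent, so adding it to a new group detaches it from the old one (which contradicts the earlier reply). I could overcome this by cloning the cubes. Finding this too much of a chore, I decided against it and just rotated the 9 cubes manually. Next: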

How do I rotate a 3D WebGL object with centre of rotation specified by (x, y, z)?

And so on…
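The surprise with `tmp_group.add(...)` in `move1` can be reproduced without three.js. A scene-graph object has at most one parent, so adding it to a second group removes it from the first, and doing that while indexing into the first group's `children` array also causes some elements to be skipped. The sketch below uses a hypothetical `SceneNode` class as a stand-in for a three.js object (only the single-parent rule is modelled) and shows one way around the problem: select the matching children first, then move them.

```javascript
// Minimal stand-in for a scene-graph node. This is NOT the three.js class;
// it only models the rule that a node has a single parent.
class SceneNode {
  constructor(x = 0) {
    this.position = { x };
    this.parent = null;
    this.children = [];
  }
  add(child) {
    // Single-parent rule: detach from the old parent first.
    if (child.parent) {
      const i = child.parent.children.indexOf(child);
      child.parent.children.splice(i, 1);
    }
    child.parent = this;
    this.children.push(child);
  }
}

// Hypothetical test data: three nodes at x = -1, then one each at 0 and 1.
function buildRow() {
  const group = new SceneNode();
  for (const x of [-1, -1, -1, 0, 1]) group.add(new SceneNode(x));
  return group;
}

// Pattern from the post: the list shrinks while the loop indexes into it.
const buggySource = buildRow();
const buggyTarget = new SceneNode();
for (let i = 0; i < buggySource.children.length; i++) {
  const c = buggySource.children[i];
  if (c.position.x < -0.99) buggyTarget.add(c);
}

// Safer pattern: take a snapshot of the matches, then move them.
const source = buildRow();
const target = new SceneNode();
const selected = source.children.filter((c) => c.position.x < -0.99);
for (const c of selected) target.add(c);

console.log(buggyTarget.children.length, target.children.length); // prints "2 3"
```

The first loop moves only two of the three matching nodes, because each removal shifts the remaining elements down by one index.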

Summary

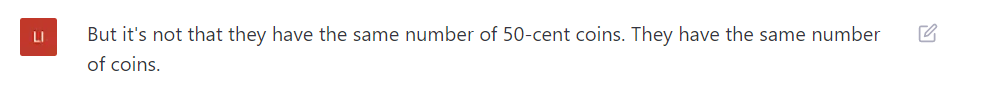

Thus the general outline seems clear. At each stage I had a mental image of what I wanted to do, broke down the process into specific subtasks, then queried ChatGPT for a very concrete implementation of the said subtask. Other than the above examples, I asked:

- how I could access the mouse scroll wheel in JavaScript,

- how I could do animation in WebGL (this took a few tries with different wordings, as each time, ChatGPT would reply with a different approach),

- if I could access the contents of an array produced by a function (yes, elementary question), etc.
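For the first item in this list, browsers report scrolling through the standard `wheel` event, whose `deltaY` property is typically positive when scrolling down and negative when scrolling up. The following is only a sketch: the function name `wheelToDirection` and the idea of mapping the sign to a turn direction are assumptions, not the code from the application.

```javascript
// Map a wheel event's vertical delta to a turn direction:
// +1 for positive deltaY, -1 for negative deltaY, 0 if there is none.
function wheelToDirection(deltaY) {
  return Math.sign(deltaY);
}

// Attach the listener only when a DOM is available (i.e. in a browser),
// so the snippet also runs unchanged outside one.
if (typeof window !== 'undefined') {
  window.addEventListener('wheel', (event) => {
    const direction = wheelToDirection(event.deltaY);
    // Hypothetical hook: the application would start a face turn here.
    console.log('turn direction:', direction);
  });
}
```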

Here are some problems I encountered.

- ChatGPT was often down due to heavy usage.

So I had to fall back on Google. Once I had gotten used to ChatGPT’s useful replies, Google’s search results seemed frustratingly irrelevant in comparison.

- The reply was sometimes truncated.

This was rare, and fortunately when it happened, the partial reply was still useful enough for my purpose. In any case, people have reported that typing ‘continue’ makes ChatGPT display the rest of the response. [ Warning: this does not work for code, arguably the most important case. ]

- The answer worked but it wasn’t satisfactory to me.

For instance, ChatGPT once suggested using the TWEEN library to animate face rotations, but I considered it too much of a hassle. It took a few more queries for me to coax ChatGPT into giving me a solution using ‘render()’. In retrospect, the first reply already provided me a possible approach.

- The answer was in a different context.

Sometimes ChatGPT gave a reply using a gl.* framework, but what I really wanted was the THREE.* framework. This could be solved by further specification (e.g. ‘reformulate the reply for a THREE.Cube object type’).

- The answer just plain didn’t work.

In one instance, ChatGPT gave the following output:

    THREE.Quaternion().setFromRotationMatrix(rotationMatrix);
    Cubes.forEach(function(cube){
        cube.applyMatrix4(rotationMatrix);
        cube.position.sub(point);
        cube.position.applyMatrix4(rotationMatrix);
        cube.position.add(point);
        cube.quaternion.multiply(rotationQuaternion);
    });

which was utterly strange since it performed a rotation, then repeated the rotation about a pivot point, before performing the rotation a third time (using the quaternion representation, no less). This could be fixed by simply understanding what was going on and editing the code by hand.
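A rotation about a pivot only needs to happen once: shift the position so that the pivot sits at the origin, rotate, and shift back. Below is a sketch of those three steps in plain JavaScript, assuming the rotation axis is parallel to the x-axis. The function name `rotateAboutPivotX` and the sample coordinates are hypothetical, and the cube's own orientation is left out to keep the example short.

```javascript
// Rotate point p by `angle` radians about the line through `pivot`
// that is parallel to the x-axis. Returns a new point.
function rotateAboutPivotX(p, pivot, angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  // 1. Move the pivot to the origin.
  const y = p.y - pivot.y;
  const z = p.z - pivot.z;
  // 2. Rotate in the y-z plane, then 3. move back.
  return {
    x: p.x,
    y: pivot.y + c * y - s * z,
    z: pivot.z + s * y + c * z,
  };
}

// Hypothetical example: a corner cube on the face x = -1,
// turned a quarter turn about the centre of that face.
const corner = { x: -1, y: 1, z: 1 };
const pivot = { x: -1, y: 0, z: 0 };
const moved = rotateAboutPivotX(corner, pivot, Math.PI / 2);
console.log(moved);
```

In three.js the same three steps should correspond to `position.sub(point)`, `position.applyAxisAngle(axis, angle)` and `position.add(point)`, followed by a single update of the cube's orientation, for instance with `rotateOnWorldAxis(axis, angle)`.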

Conclusion

I have no illusions about my level of proficiency in WebGL, but this experiment proves that ChatGPT lets us get to a functional level in a remarkably short time.
